By Vitalii Oren

7/22/2025

Scraping Agent Manual 🤖

🤖 What is Scraping Agent?

- Agent extracts the URL structure and generates a reusable scraping script based on a successful Extractor Case.

- It uses an LLM only once - during code generation - which makes it ideal for scaling, as each Agent scraping request doesn't include LLM processing costs, unlike the Extractor.

- Since AI Scraping Agents don’t rely on LLMs at run time, they are more cost-effective than the Extractor and free from LLM hallucinations. However, they cannot scrape unstructured content such as article text, and they cannot generate summaries or insights from the page.

🔎 When should I use Agent?

- Scaled Scraping: When you need to collect the same type of information from many pages with the same layout—ideally from a single website where the data structure is identical across pages (e.g., scraping 10,000 product pages from www.farfetch.com).

- Monitoring: When you're regularly collecting the same type of data from one page (e.g., scraping events from www.meetup.com every 24 hours).

⚙️ How to set up Scraping Agent?

Step #1: Extract Data

- Use Extractor: Set and submit an Extractor Request.

- Double-check: Run several test extractions to ensure the results are accurate and consistent.
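
The double-check above can be partly automated. The snippet below is a minimal sketch, assuming each test extraction returns a flat dictionary of field names and values (this manual does not specify the output format, so that shape is an assumption). The function name `unstable_fields` and the sample data are hypothetical. It lists the fields whose values changed between repeated runs on the same URL.

```python
from typing import Any


def unstable_fields(runs: list[dict[str, Any]]) -> dict[str, list[Any]]:
    """Return fields whose values differ between runs, with the distinct values seen."""
    fields = sorted({key for run in runs for key in run})
    report: dict[str, list[Any]] = {}
    for field in fields:
        seen: list[Any] = []
        for run in runs:
            value = run.get(field)  # None marks a field missing from that run
            if value not in seen:
                seen.append(value)
        if len(seen) > 1:
            report[field] = seen
    return report


# Hypothetical results from three test extractions of the same page.
runs = [
    {"title": "Silk shirt", "price": "120 USD"},
    {"title": "Silk shirt", "price": "120 USD"},
    {"title": "Silk shirt", "price": "$120"},
]
print(unstable_fields(runs))  # {'price': ['120 USD', '$120']}
```

An empty report suggests the extraction is stable enough to move on to Step #2.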

‼️ You can only scrape data from the specific page whose URL you provide, not the entire website.

Step #2: Build Agent

- If you're satisfied with the data returned by the Extractor after a few runs, click

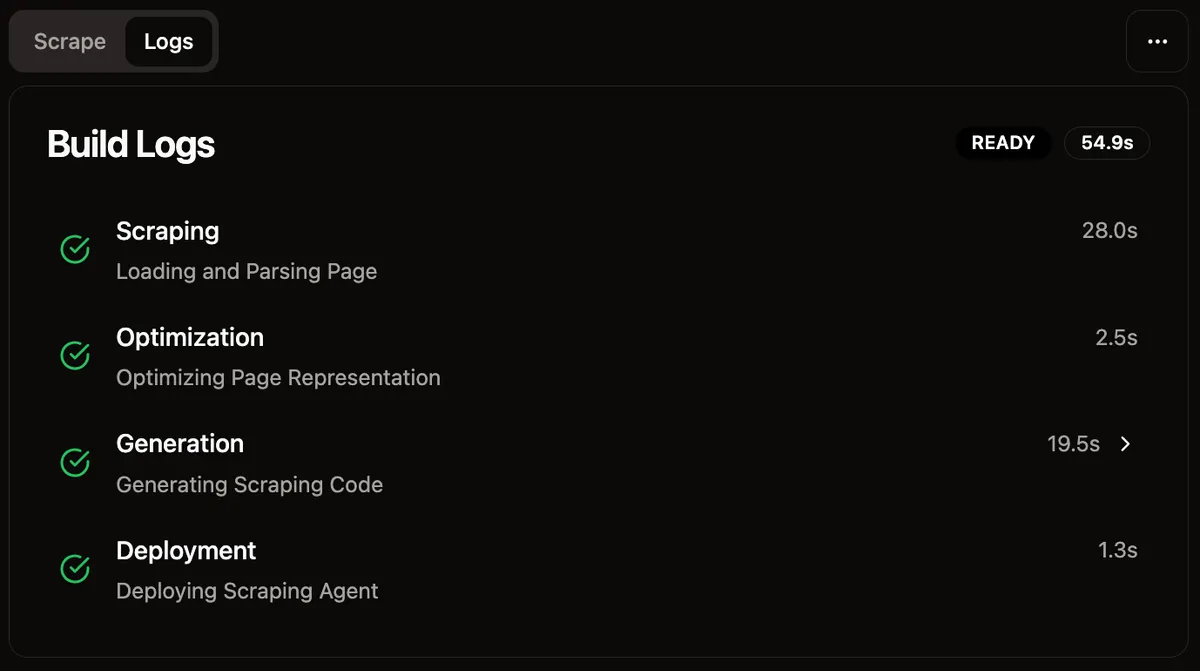

Create Agenton Extractor page to build a custom agent. - Go to the Agent page and wait until the status changes to

Ready. You can monitor the process onAgent Logstab. - After the Agent is created, we recommend testing it several times on different URLs.

‼️ If the Agent doesn’t return consistent results during testing, try rebuilding it — bugs can sometimes occur during the code generation stage.
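
Testing on different URLs can be checked with a short script. This is a sketch under stated assumptions: it assumes the Agent returns one dictionary of fields per URL, which this manual does not confirm, and the name `find_incomplete`, the field names, and the URLs are hypothetical. It reports which required fields came back missing or empty for each test URL.

```python
from typing import Any

# Values treated as "nothing was extracted" for a field.
EMPTY_VALUES = (None, "", [], {})


def find_incomplete(
    results: dict[str, dict[str, Any]], required: list[str]
) -> dict[str, list[str]]:
    """Map each URL to the required fields that are missing or empty in its result."""
    problems: dict[str, list[str]] = {}
    for url, record in results.items():
        missing = [field for field in required if record.get(field) in EMPTY_VALUES]
        if missing:
            problems[url] = missing
    return problems


# Hypothetical Agent output for three test URLs.
results = {
    "https://example.com/item/1": {"title": "Silk shirt", "price": "120 USD"},
    "https://example.com/item/2": {"title": "Wool coat", "price": ""},
    "https://example.com/item/3": {"title": "Linen scarf"},
}
print(find_incomplete(results, ["title", "price"]))
```

If the same field is missing on many URLs, that may be a sign the Agent should be rebuilt.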

Step #3: Automate

- Automation Tools (n8n, Zapier, Make): Run AI Scraping Agents on thousands of URLs as part of your n8n, Zapier, or Make workflow.

- API: Send a batch of URLs via API.
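
Sending a batch of URLs via API might look like the sketch below. Everything specific here is an assumption, not the documented API: the endpoint `https://api.example.com/v1/agents/{agent_id}/run`, the `{"urls": [...]}` payload, the Bearer token header, and the batch size of 100 are all placeholders. Check the actual API reference for the real values. The sketch splits a long URL list into batches and posts each one.

```python
import json
import urllib.request
from typing import Any, Iterator

# Hypothetical endpoint; replace with the one from the real API reference.
API_URL = "https://api.example.com/v1/agents/{agent_id}/run"


def chunked(urls: list[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive batches of at most `size` URLs."""
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


def build_request(agent_id: str, api_key: str, batch: list[str]) -> urllib.request.Request:
    """Build a POST request carrying one batch (payload shape is an assumption)."""
    body = json.dumps({"urls": batch}).encode("utf-8")
    return urllib.request.Request(
        API_URL.format(agent_id=agent_id),
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


def run_agent(agent_id: str, api_key: str, urls: list[str], batch_size: int = 100) -> list[Any]:
    """Send every batch in turn and collect the decoded JSON responses."""
    responses = []
    for batch in chunked(urls, batch_size):
        request = build_request(agent_id, api_key, batch)
        with urllib.request.urlopen(request, timeout=60) as response:
            responses.append(json.load(response))
    return responses
```

Usage would be along the lines of `run_agent("my-agent-id", "my-api-key", urls)`, where both identifiers are placeholders. Batching keeps each request small, so one failed call does not lose the whole run.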