Prompt to scrape any URL

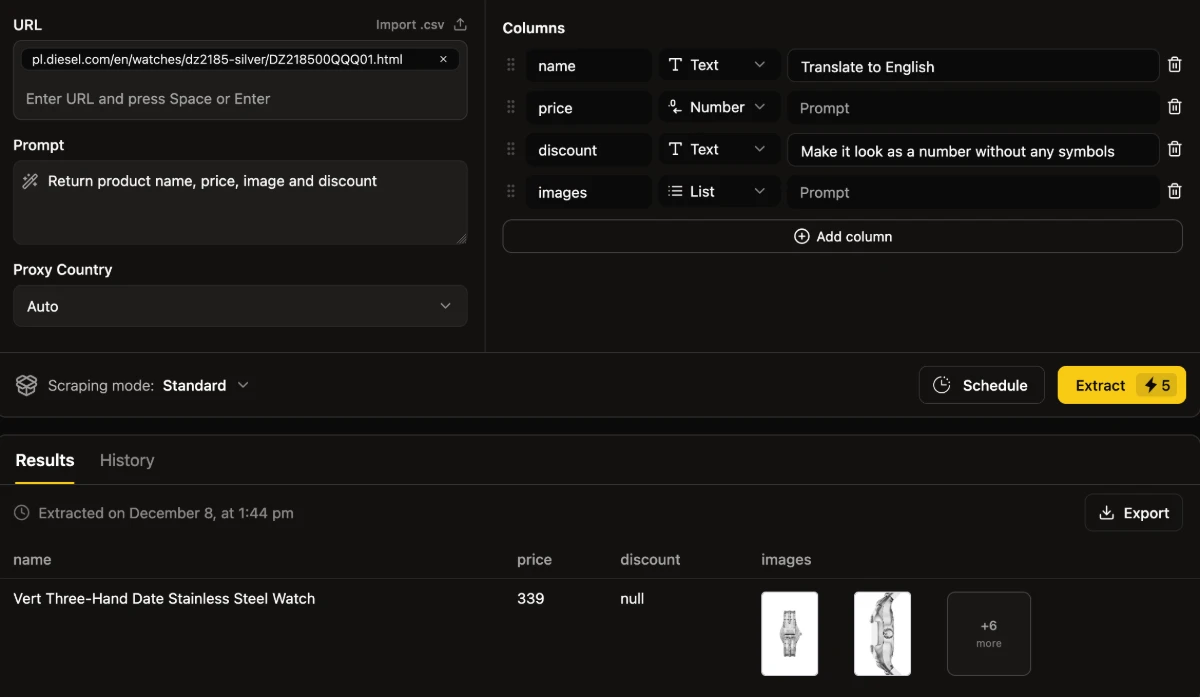

Apply simple human instructions to scrape data at scale from any layout

HOW IT WORKS

3 easy steps to extract any data

Select

Input URLs or Select from Website Catalog

Describe

Give me the products' titles, prices, and discounts

Give human-like instructions to get data

Extract

Extract instantly or schedule recurring extraction

USE CASES

Scrape what matters

E-commerce Listing Details

Get all the info about your competitors' products

Real Estate Information

Analyze the real estate market to get the best deal

Lead Generation & Competitor Research

Gather contact info for your prospective leads

Insights from News

Analyze policy, the economy, or your niche using only the relevant info

| quote | context | quoter |
|---|---|---|
| By a factor of 2 to 1, you want a new political party and you shall have it! | Referencing that poll in his post on Saturday, Musk wrote: | Musk |
| When it comes to bankrupting our country with waste & graft, we live in a one-party system, not a democracy. | Referencing that poll in his post on Saturday, Musk wrote: | Musk |
| Today, the America Party is formed to give you back your freedom. | Referencing that poll in his post on Saturday, Musk wrote: | Musk |
| big, beautiful bill | The legislation - which Trump has called his | Trump |
| Elon may get more subsidy than any human being in history, by far, | Trump wrote on his social media site, Truth Social, this week. | Trump |
| Without subsidies, Elon would probably have to close up shop and head back home to South Africa. | Trump wrote on his social media site, Truth Social, this week. | Trump |

FEATURES

Features for every Use Case

Use Website Catalog

Browse and select Pages directly from any Sitemap

Generate Scraper

Create scraping Code you can scale and reuse Instantly

Schedule Scraping

Specify data targets and automate their extraction

VIDEO TUTORIALS

Quick How-to Videos

Scrape Hundreds of Pages

Scrape in n8n, Make, Zapier

Pricing

Pay only for the scraping actions you actually need

AI Extraction

Extract any data from any URL

5 credits per Extract

Scraper Generation

Build your own reusable Scraper (generate scraping code)

50 credits per Scraper

Scraping

Scrape pages with the generated Scraper

1 credit per Scrape

HTML Parsing

Parse HTML with the generated Scraper (Enterprise Only)

25 credits / per 1k parses
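To compare the options, here is a rough sketch of the credit arithmetic using only the prices listed above. It assumes that one Extract or one Scrape covers exactly one page and that a single generated Scraper fits every page in the batch; neither is stated on this page, and the function names are made up for illustration.

```python
import math

# Credit prices as listed in the pricing section.
AI_EXTRACT_CREDITS = 5           # per Extract
SCRAPER_GENERATION_CREDITS = 50  # one-time cost per generated Scraper
SCRAPE_CREDITS = 1               # per Scrape with a generated Scraper


def ai_extraction_cost(pages: int) -> int:
    """Credits for AI Extraction, assuming one Extract per page."""
    return AI_EXTRACT_CREDITS * pages


def generated_scraper_cost(pages: int, scrapers: int = 1) -> int:
    """Credits for generating `scrapers` Scrapers, then one Scrape per page."""
    return SCRAPER_GENERATION_CREDITS * scrapers + SCRAPE_CREDITS * pages


def break_even_pages() -> int:
    """Smallest page count at which one generated Scraper is strictly cheaper."""
    # Solve 50 + 1*n = 5*n for n, then step to the next whole page.
    n = SCRAPER_GENERATION_CREDITS / (AI_EXTRACT_CREDITS - SCRAPE_CREDITS)
    return math.floor(n) + 1


if __name__ == "__main__":
    for pages in (5, 12, 13, 100):
        print(pages, ai_extraction_cost(pages), generated_scraper_cost(pages))
    print("break-even at", break_even_pages(), "pages")
```

Under these assumptions, AI Extraction is cheaper for small one-off jobs, while a generated Scraper pays for itself from 13 pages of the same layout onward.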

Frequently Asked Questions

You asked.

We answered.